WARNING: This repository is no longer maintained ⚠️

This repository will not be updated. The repository will be kept available in read-only mode.

Build an AI Powered AR Character in Unity with AR Foundation

This pattern was originally published using ARKit and was only available on iOS. Now that Unity has introduced AR Foundation, this pattern can run on either ARKit (iOS) or ARCore (Android), depending on which platform you build for.

In this Code Pattern we will use Watson Assistant, Speech-to-Text, and Text-to-Speech, deployed to an iPhone or an Android phone using ARKit or ARCore respectively, to create a voice-powered animated avatar in Unity.

Augmented reality offers a lower barrier to entry for both developers and end users, thanks to AR framework support in phones and digital eyewear. Unity’s AR Foundation lowers the barrier further for developers by letting a single Unity project's source code take advantage of both ARKit and ARCore.

For more information about AR Foundation, take a look at Unity’s blog.

When the reader has completed this Code Pattern, they will understand how to:

- Add IBM Watson Speech-to-Text, Assistant, and Text-to-Speech to Unity with AR Foundation to create an augmented reality experience.

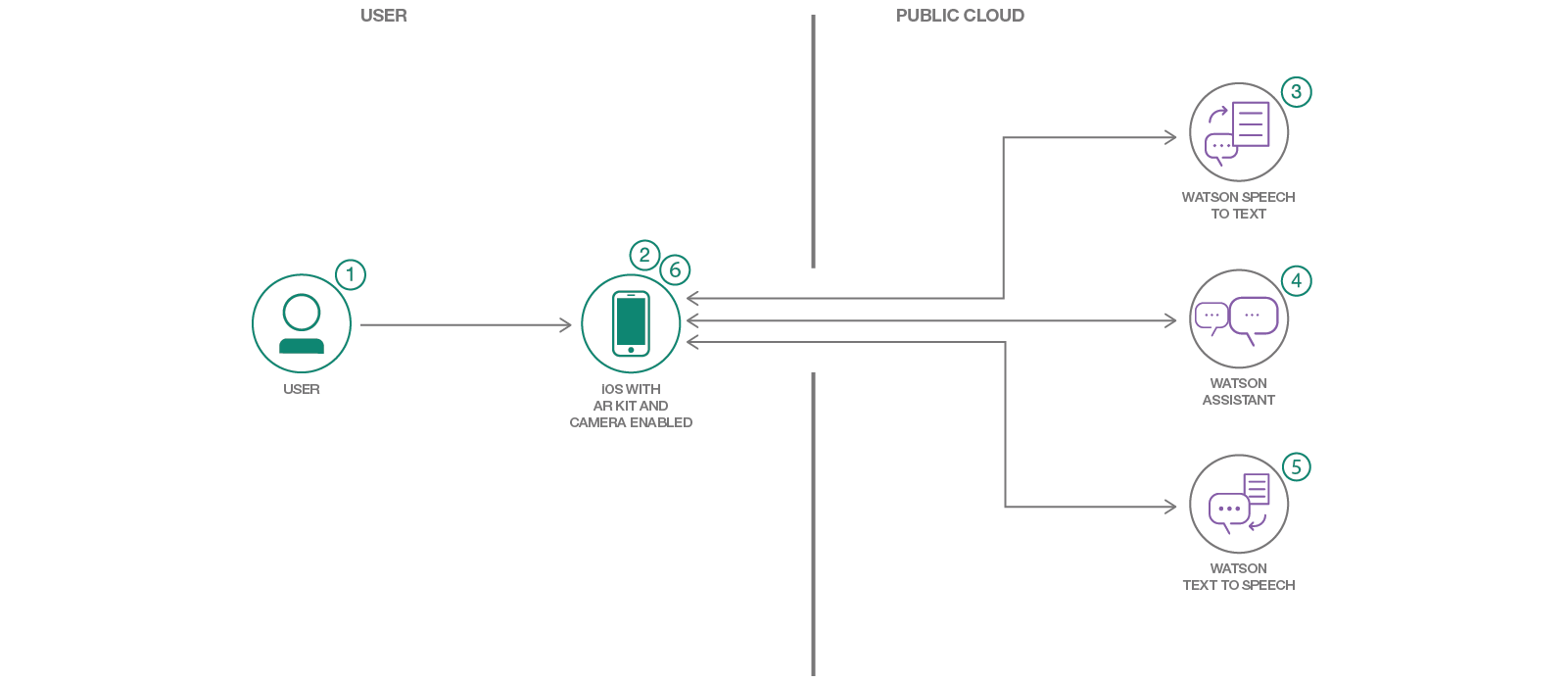

Flow

- The user interacts in augmented reality and gives voice commands such as “Walk Forward”.

- The phone microphone picks up the voice command and the running application sends it to Watson Speech-to-Text.

- Watson Speech-to-Text converts the audio to text and returns it to the running application on the phone.

- The application sends the text to Watson Assistant. Watson Assistant returns the recognized intent “Forward”, which triggers an event that changes the avatar's animation state.

- The application sends the response from Watson Assistant to Watson Text-to-Speech.

- Watson Text-to-Speech converts the text to audio and returns it to the running application on the phone.

- The application plays the audio response and waits for the next voice command.
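The flow above amounts to one request/response turn per voice command. The following is a minimal Python sketch of that turn, not the project's own C# code (which lives in WatsonLogic.cs and uses the Watson SDK for Unity). The functions passed in as `transcribe`, `converse`, and `synthesize` are hypothetical stand-ins for Speech to Text, Assistant, and Text to Speech, and the `INTENT_TO_ANIMATION` table, including the `Walk` state name, is an assumption for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical intent -> animation-state table. "Forward" comes from the flow
# described above; the "Walk" state name is an assumption.
INTENT_TO_ANIMATION = {"Forward": "Walk"}


@dataclass
class TurnResult:
    transcript: str
    intent: Optional[str]
    animation: Optional[str]  # None means the avatar keeps its current state
    reply_audio: bytes


def run_turn(
    audio: bytes,
    transcribe: Callable[[bytes], str],  # stand-in for Speech to Text
    converse: Callable[[str], Tuple[Optional[str], str]],  # stand-in for Assistant: (intent, reply text)
    synthesize: Callable[[str], bytes],  # stand-in for Text to Speech
) -> TurnResult:
    """Run one voice command through Speech to Text -> Assistant -> Text to Speech."""
    transcript = transcribe(audio)             # audio -> text
    intent, reply_text = converse(transcript)  # text -> intent + reply text
    # The recognized intent (if any) selects the next animation state.
    animation = INTENT_TO_ANIMATION.get(intent) if intent else None
    reply_audio = synthesize(reply_text)       # reply text -> audio to play
    return TurnResult(transcript, intent, animation, reply_audio)


if __name__ == "__main__":
    # Fake services so the sketch runs without network access.
    result = run_turn(
        b"<microphone samples>",
        transcribe=lambda audio: "walk forward",
        converse=lambda text: ("Forward", "Walking forward"),
        synthesize=lambda text: text.encode("utf-8"),
    )
    print(result.animation)  # Walk
```

Passing the three services in as functions keeps the turn logic independent of any SDK, so it can be exercised with fakes before wiring in real service calls.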

Included components

- IBM Watson Assistant: Create a chatbot with a program that conducts a conversation via auditory or textual methods.

- IBM Watson Speech-to-Text: Converts audio voice into written text.

- IBM Watson Text-to-Speech: Converts written text into audio.

Featured technologies

- Unity: A cross-platform game engine used to develop video games for PC, consoles, mobile devices and websites.

- AR Foundation: A Unity package for AR functionality to create augmented reality experiences.

Steps

1. Before You Begin

- IBM Cloud Account

- Unity

2. Create IBM Cloud services

On your local machine:

git clone https://github.com/IBM/Watson-Unity-ARKit.git
cd Watson-Unity-ARKit

In IBM Cloud:

- Create a Speech-to-Text service instance.

- Create a Text-to-Speech service instance.

- Create an Assistant service instance.

- Once you see the services in the Dashboard, select the Assistant service you created and click “Launch Tool”.

- After logging in to the Assistant tool, click “Create a Skill”.

- Click the “Create skill” button.

- Click “Import skill”.

- Import the Assistant voiceActivatedMotionSimple.json file located in your clone of this repository.

- Once the skill has been created, you need to add it to an assistant. If you have opened your skill, back out of it, then click “Assistants”.

- Click “Create Assistant”.

- Name your assistant and click “Create assistant”.

- Click “Add dialog skill” to add the skill you just imported to this assistant.

- Click the ... menu at the top and click “Settings” to see the Assistant Settings.

- Click “API Details” and find your Assistant Id. You will need it in the next section.
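In this project the Watson SDK for Unity makes the Assistant call for you. To show what the Assistant Id is used for, the following is an assumption-labeled Python sketch (standard library only) of an Assistant v2 REST message request: it builds, but does not send, a `POST .../v2/assistants/{assistant_id}/sessions/{session_id}/message` request and reads the top intent from a response. The `API_VERSION` date, the service URL, the session ID (which would come from a separate create-session call, not shown), and the sample response are placeholders; check the Assistant v2 API reference before relying on them.

```python
import base64
import json
import urllib.parse
import urllib.request
from typing import Optional

API_VERSION = "2019-02-28"  # assumption: a valid v2 version date; confirm in the API reference


def build_message_request(service_url: str, assistant_id: str, session_id: str,
                          api_key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) an Assistant v2 message request."""
    path = "/v2/assistants/{}/sessions/{}/message".format(
        urllib.parse.quote(assistant_id), urllib.parse.quote(session_id))
    query = urllib.parse.urlencode({"version": API_VERSION})
    url = service_url.rstrip("/") + path + "?" + query
    body = json.dumps({"input": {"message_type": "text", "text": text}}).encode("utf-8")
    # IBM Cloud IAM API keys can be sent as HTTP Basic auth with the user name "apikey".
    token = base64.b64encode(f"apikey:{api_key}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"})


def top_intent(response: dict) -> Optional[str]:
    """Return the highest-confidence intent name in a v2 message response, if any."""
    intents = response.get("output", {}).get("intents", [])
    if not intents:
        return None
    best = max(intents, key=lambda i: i.get("confidence", 0.0))
    return best["intent"]


if __name__ == "__main__":
    req = build_message_request("https://example.invalid/assistant/api",  # placeholder URL
                                "<assistant-id>", "<session-id>", "<apikey>", "walk forward")
    print(req.get_method(), req.full_url)
    sample_response = {  # hand-written example, not captured from the service
        "output": {
            "intents": [{"intent": "Forward", "confidence": 0.97}],
            "generic": [{"response_type": "text", "text": "Walking forward"}],
        }
    }
    print(top_intent(sample_response))  # Forward
```

The intent name returned here is what the app would map to an animation state, and the text in `output.generic` is what would be passed on to Text to Speech.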

3. Building and Running

Note: This has been compiled and tested using Unity 2018.3.0f2, Watson SDK for Unity 3.1.0 (2019-04-09), and Unity Core SDK 0.2.0 (2019-04-09).

Note: If your services are in any IBM Cloud region other than US-South (Dallas), you must use Unity 2018.2 or higher. Those regions accept only TLS 1.2, and Unity 2018.2 or higher is needed for TLS 1.2.

The unity-sdk and unity-sdk-core directories inside the Assets directory are empty placeholders for the SDKs. After running the following commands, either delete these empty directories or move the contents of the downloaded SDKs into them.

- Download the Watson SDK for Unity or perform the following:

git clone https://github.com/watson-developer-cloud/unity-sdk.git

Make sure you are on the 3.1.0 tagged branch.

- Download the Unity Core SDK or perform the following:

git clone https://github.com/IBM/unity-sdk-core.git

Make sure you are on the 0.2.0 tagged branch.

- Open Unity and inside the project launcher select the button.

- If prompted to upgrade the project to a newer Unity version, do so.

- Follow these instructions to add the Watson SDK for Unity downloaded in step 1 to the project.

- Follow these instructions to create your Speech to Text, Text to Speech, and Watson Assistant services and find your credentials in IBM Cloud.

Note: the following instructions reflect scene changes made for AR Foundation; some game objects have been added or replaced.

- In the Unity Hierarchy view, expand AR Default Plane and click DefaultAvatar. If you are not in the Main scene, click Scenes and then Main in your Project window, then find the game objects listed above.

- In the Inspector you will see variables for Speech To Text, Text to Speech, and Assistant. If you are using US-South (Dallas), you can leave the Assistant URL, Speech to Text URL, and Text To Speech URL fields blank; they take the default values shown in the WatsonLogic.cs file. Otherwise, provide the URL listed on the Manage page for each service in IBM Cloud.

- Fill out Assistant Id, Assistant IAM Apikey, Speech to Text Iam Apikey, and Text to Speech Iam Apikey. Each Iam Apikey value is the API key (token) listed under the URL on the Manage page for that service.
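The Inspector settings above follow a simple rule: each service URL is optional and falls back to a US-South default, while the Assistant Id and the three API keys are required. The following hypothetical Python sketch models that rule as a checklist; it is not code from the project. The field names mirror the Inspector labels, and the `DEFAULT_URLS` values are placeholders, since the actual defaults are defined in WatsonLogic.cs.

```python
from dataclasses import dataclass
from typing import Dict, List

# Placeholders only: copy the real US-South defaults from WatsonLogic.cs.
DEFAULT_URLS: Dict[str, str] = {
    "assistant": "<Assistant default URL from WatsonLogic.cs>",
    "speech_to_text": "<Speech to Text default URL from WatsonLogic.cs>",
    "text_to_speech": "<Text to Speech default URL from WatsonLogic.cs>",
}

REQUIRED_FIELDS = (
    "assistant_id",
    "assistant_iam_apikey",
    "speech_to_text_iam_apikey",
    "text_to_speech_iam_apikey",
)


@dataclass
class WatsonSettings:
    """Hypothetical mirror of the DefaultAvatar Inspector variables."""
    assistant_id: str = ""
    assistant_iam_apikey: str = ""
    speech_to_text_iam_apikey: str = ""
    text_to_speech_iam_apikey: str = ""
    # Leave blank in US-South (Dallas); otherwise use each service's Manage page URL.
    assistant_url: str = ""
    speech_to_text_url: str = ""
    text_to_speech_url: str = ""

    def missing_fields(self) -> List[str]:
        """Names of required fields that are still empty."""
        return [name for name in REQUIRED_FIELDS if not getattr(self, name).strip()]

    def resolved_urls(self) -> Dict[str, str]:
        """Configured URL per service, or the default when the field is blank."""
        configured = {
            "assistant": self.assistant_url,
            "speech_to_text": self.speech_to_text_url,
            "text_to_speech": self.text_to_speech_url,
        }
        return {svc: url.strip() or DEFAULT_URLS[svc] for svc, url in configured.items()}


if __name__ == "__main__":
    settings = WatsonSettings(assistant_id="my-assistant-id", assistant_iam_apikey="key")
    print(settings.missing_fields())
    # ['speech_to_text_iam_apikey', 'text_to_speech_iam_apikey']
```

A blank or whitespace-only field counts as missing, which matches the advice to leave URL fields empty (rather than partially filled) when relying on the defaults.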

Building for iOS

Build steps for iOS have been tested with iOS 11+ and Xcode 10.2.1.

- To build for iOS and deploy to your phone, open File -> Build Settings (Ctrl+Shift+B, or Cmd+Shift+B on macOS) and click Build.

- When prompted, name your build.

- When the build is completed, open the project in Xcode by clicking Unity-iPhone.xcodeproj.

- Follow the steps to sign your app. Note: you must have an Apple Developer Account.

- Connect your phone via USB and select it from the target device list at the top of Xcode. Click the play button to run it.

- Alternatively, connect the phone via USB and choose File -> Build and Run (Ctrl+B).

Building for Android

Build steps for Android have been tested with Android 9 (Pie) on a Pixel 2 device with Android Studio 3.4.1.

- To build for Android and deploy to your phone, open File -> Build Settings (Ctrl+Shift+B), select Android, and click Switch Platform.

- The project will reload in Unity. When done, click Build.

- When prompted, name your build.

- When the build is completed, install the APK on your emulator or device.

- Open the app to run.

Links

- Watson Unity SDK

- Unity Core SDK

Troubleshooting

AR features are only available on iOS 11 or later and cannot run in an emulator or simulator. Check that your Player Settings target a minimum iOS version of 11 and that your Xcode deployment target (under Deployment Info) is also 11.

In order to run the app you will need to sign it. Follow steps here.

macOS Mojave updates may change security settings and block Unity's access to the microphone. If Watson Speech to Text appears to be ready and listening but does not hear any audio, check your security settings for microphone permissions. For more information: https://support.apple.com/en-us/HT209175.

You may need the ARCore APK for your Android emulator. This pattern has been tested with ARCore SDK v1.9.0 on a Pixel 2 device running Pie.

Learn more

- Artificial Intelligence Code Patterns: Enjoyed this Code Pattern? Check out our other AI Code Patterns.

- AI and Data Code Pattern Playlist: Bookmark our playlist with all of our Code Pattern videos

- With Watson: Want to take your Watson app to the next level? Looking to utilize Watson Brand assets? Join the With Watson program to leverage exclusive brand, marketing, and tech resources to amplify and accelerate your Watson embedded commercial solution.

License

This code pattern is licensed under the Apache Software License, Version 2. Separate third party code objects invoked within this code pattern are licensed by their respective providers pursuant to their own separate licenses. Contributions are subject to the Developer Certificate of Origin, Version 1.1 (DCO) and the Apache Software License, Version 2.